GCReID: Generalized continual person re-identification via meta learning and knowledge accumulation

Background

Person re-identification (ReID) has made good progress in stationary domains. When new scenarios (domains) emerge unexpectedly, the ReID model must be retrained to adapt to them, which leads to catastrophic forgetting of previously learned domains. Continual learning trains the model on the domains in the order in which they emerge to alleviate catastrophic forgetting.

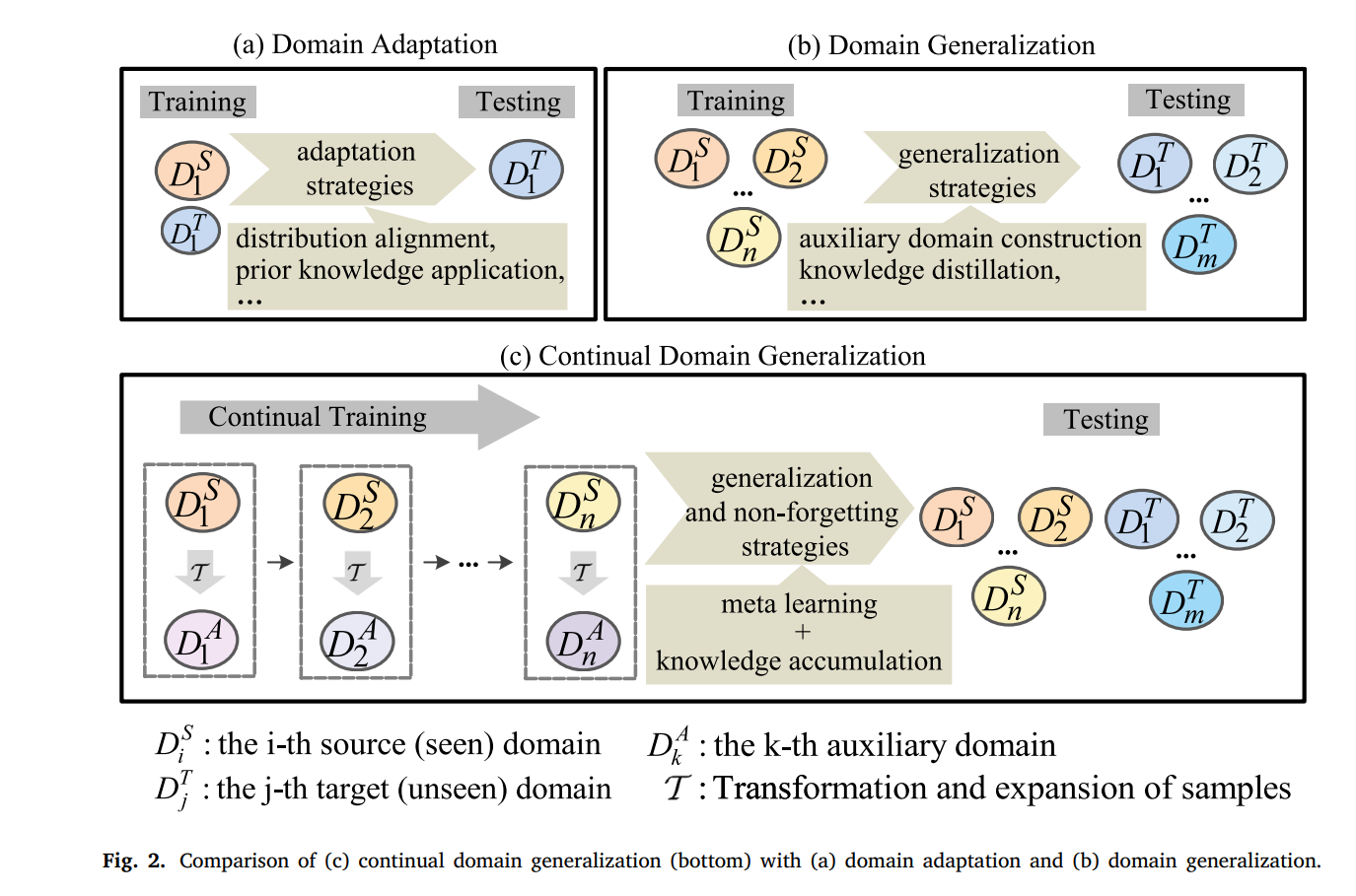

However, continual person ReID performs poorly when it is applied directly to unseen scenarios, as shown in Fig. 1. That is, its generalization ability is limited by the distributional differences between the domains that participate in training and those that do not. Domain adaptation and domain generalization can help continual person ReID improve its performance on unseen domains. As shown in Fig. 2(a), standard domain adaptation requires a subset of the unseen domains to participate in training to guarantee better adaptation to them. Domain generalization requires multiple domains to participate in training to enhance generalization; that is, as shown in Fig. 2(b), the source domains have to be accessible in advance and at the same time.

In most cases, scenarios change in order, i.e., source domains arrive one after another, as shown in Fig. 2(c), rather than being accessible simultaneously. Continual domain generalization, illustrated in Fig. 2(c), has to consider not only catastrophic forgetting under the continual learning paradigm (domains arrive sequentially), but also generalizability to unseen domains. Therefore, just as parameter regularization, a representative continual learning approach, is used to resist forgetting, meta-learning-based parameter regularization has been introduced into this field to improve the generalization of the model.

Our Contributions

In this paper, we enhance the generalization of continual person ReID from the aspects of sample diversity and distribution difference. Our main contributions are summarized as follows.

We simulate unseen domains according to prior knowledge to enhance sample diversity.

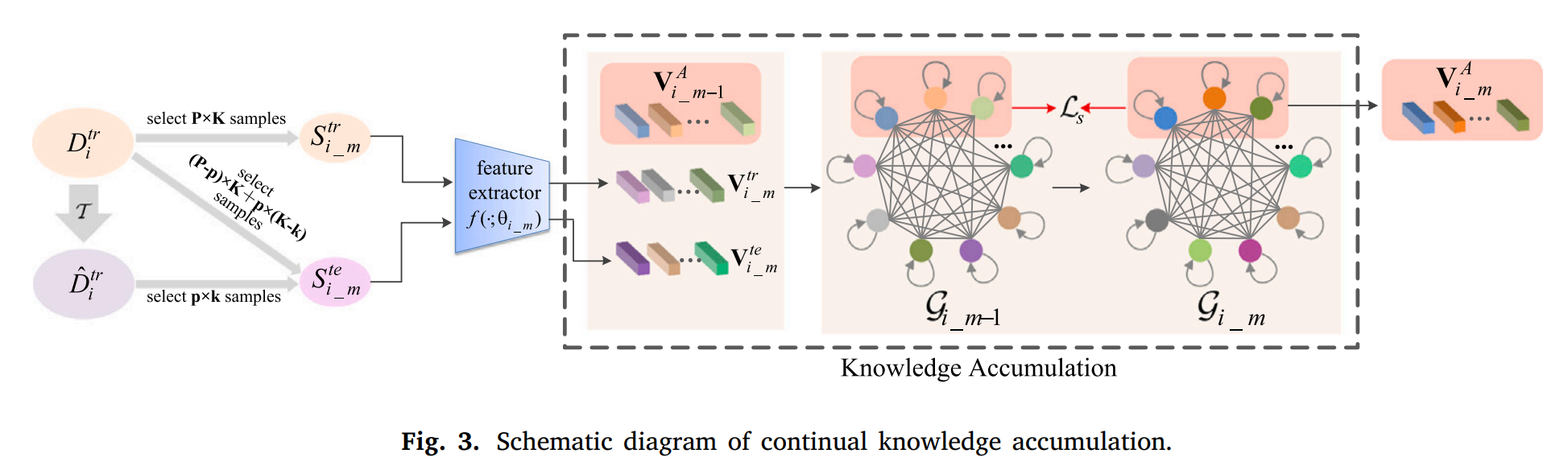

A fully connected graph is proposed to store accumulated knowledge learned from all seen domains and the simulated domains.

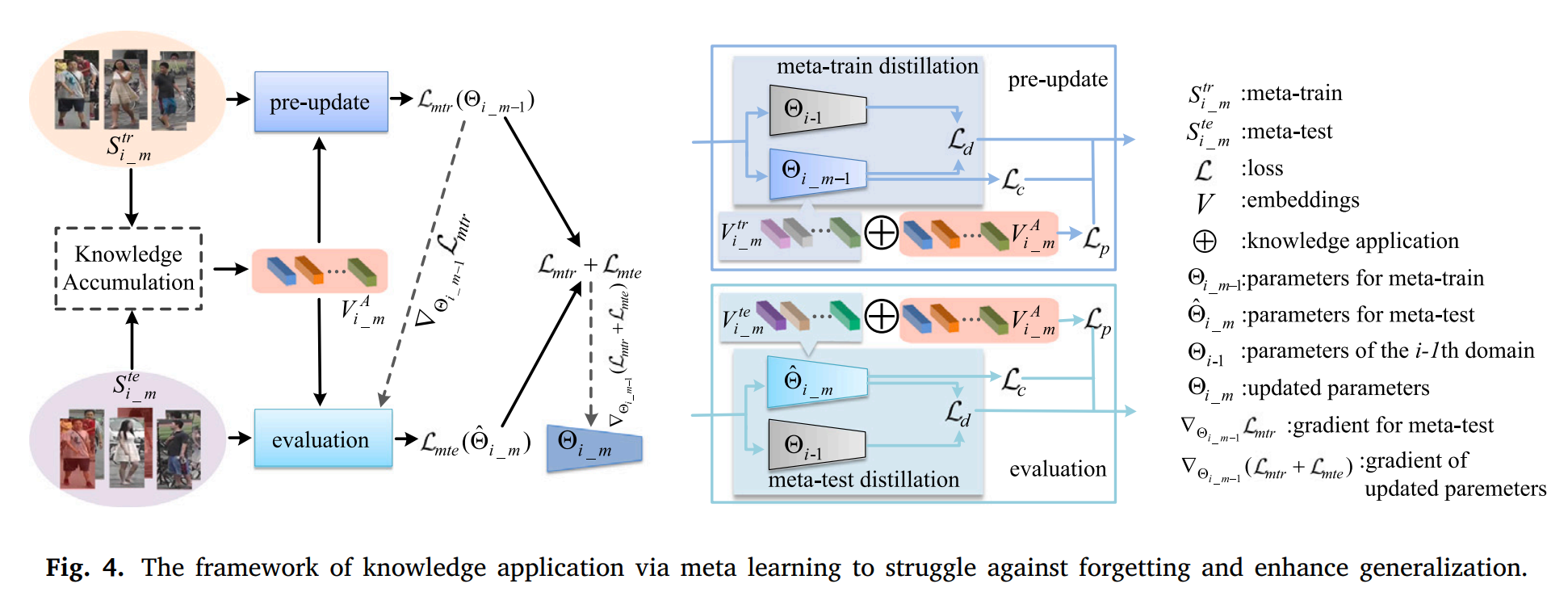

Meta-train is used to extract new knowledge from the current domain.

Meta-test is used to extract potential knowledge from unseen domains, which are simulated according to prior knowledge.

The above knowledge is gathered to update the accumulated knowledge via a graph attention network.
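As a rough illustration of the last three points, the sketch below runs one single-head graph-attention pass over a fully connected graph whose nodes are the stored knowledge vectors plus one vector from meta-train and one from meta-test. It is a minimal NumPy sketch of the standard graph attention formulation, not the architecture used in GCReID: the function `gat_update`, the node layout, the feature size, and the random weights `W` and `a` are all assumptions made for illustration.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def gat_update(nodes, W, a, slope=0.2):
    """One single-head graph-attention pass over a fully connected graph.

    nodes: (N, F) node features, W: (F, F_out) projection,
    a: (2 * F_out,) attention vector. Hypothetical helper, not the GCReID code.
    """
    h = nodes @ W                                  # projected features, (N, F_out)
    f_out = h.shape[1]
    # e_ij = LeakyReLU(a^T [h_i || h_j]), split into a source and a target term
    src = h @ a[:f_out]                            # (N,)
    dst = h @ a[f_out:]                            # (N,)
    e = leaky_relu(src[:, None] + dst[None, :], slope)
    e = e - e.max(axis=1, keepdims=True)           # numerical stability
    alpha = np.exp(e)
    alpha /= alpha.sum(axis=1, keepdims=True)      # softmax over all neighbours
    return alpha @ h, alpha

rng = np.random.default_rng(0)
feat_dim, n_memory = 8, 4                          # assumed sizes
accumulated = rng.normal(size=(n_memory, feat_dim))   # stored knowledge nodes
new_knowledge = rng.normal(size=(1, feat_dim))        # stands in for meta-train output
potential_knowledge = rng.normal(size=(1, feat_dim))  # stands in for meta-test output

nodes = np.vstack([accumulated, new_knowledge, potential_knowledge])
W = rng.normal(scale=0.1, size=(feat_dim, feat_dim))
a = rng.normal(scale=0.1, size=(2 * feat_dim,))

updated, alpha = gat_update(nodes, W, a)
accumulated_next = updated[:n_memory]              # refreshed accumulated knowledge
```

Because the graph is fully connected, every stored node attends to every other node, including the two newly extracted ones, so each row of `alpha` is a distribution over all six nodes. In a trained model `W` and `a` would be learned rather than sampled.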

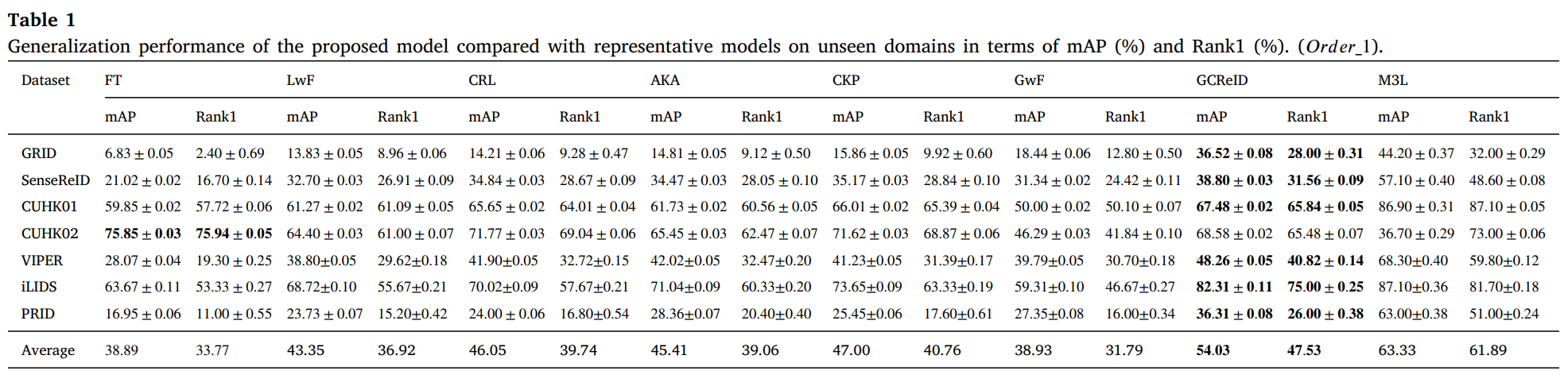

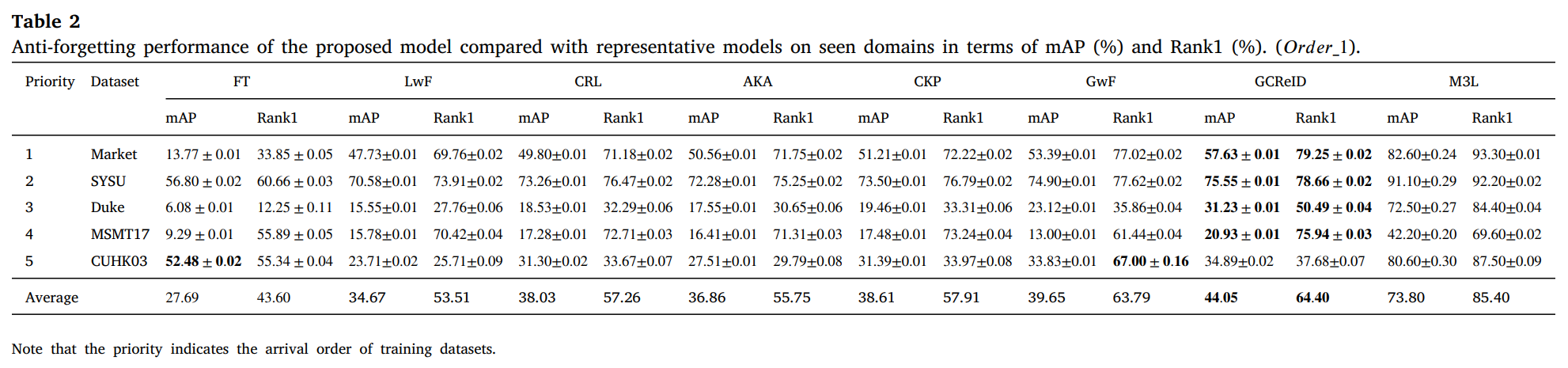

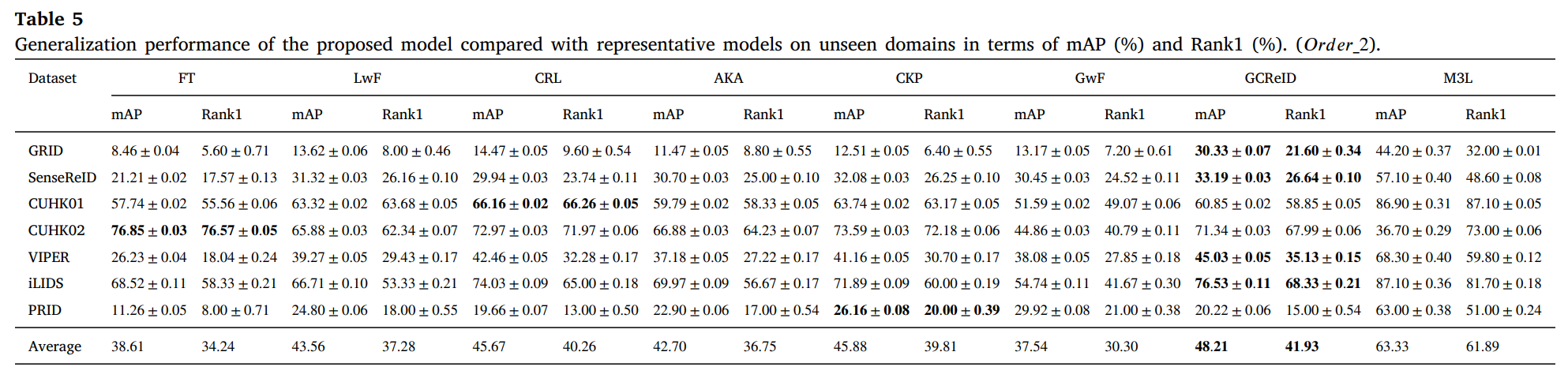

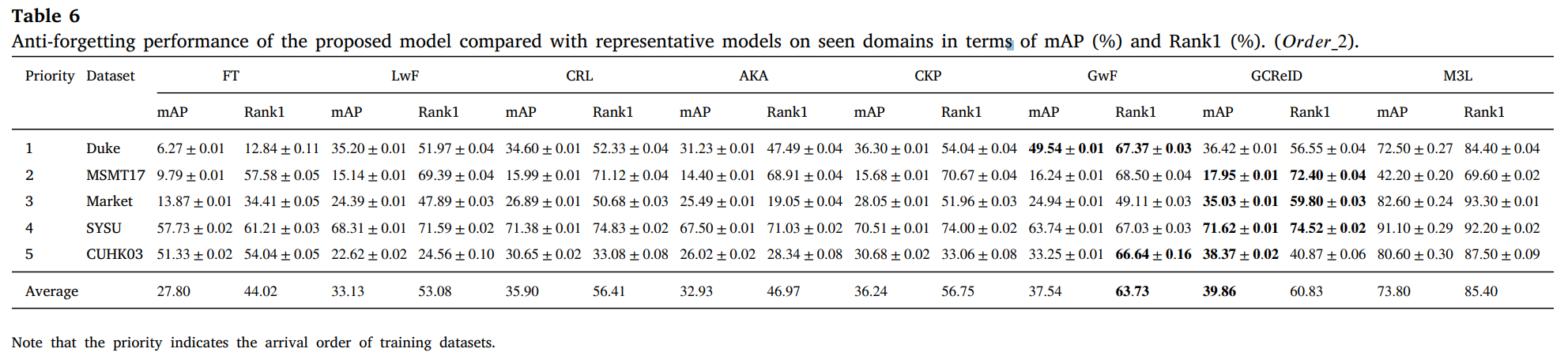

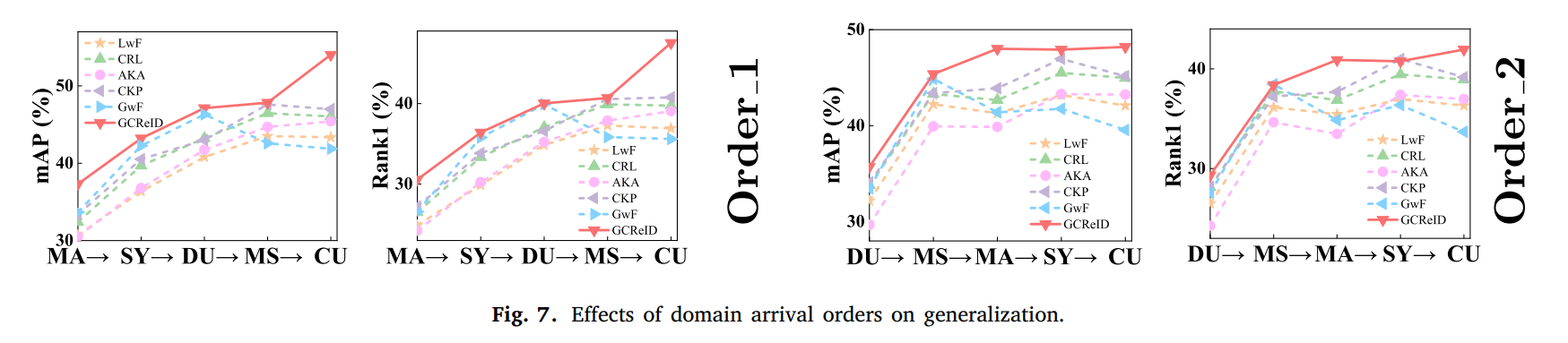

We evaluate the proposed method and compare it with 6 representative methods on 12 benchmark datasets.

We conduct experiments on 12 person ReID benchmarks, including Market, CUHKSYSU, DukeMTMC-reID, MSMT17, CUHK03, Grid, SenseReID, CUHK01, CUHK02, VIPER, and iLIDS. The mean average precision (mAP) and Rank-1 accuracy are used to evaluate performance on the datasets. The datasets can be downloaded from Torchreid_Dataset_Doc and DualNorm. We compare the proposed model, GCReID, with 7 methods, namely Fine-Tuning (FT), Learning without Forgetting (LwF), continual representation learning (CRL), adaptive knowledge accumulation (AKA), continual knowledge preserving (CKP), generalizing without forgetting (GwF), and memory-based multi-source meta-learning (M3L).

For more details, please see our paper.
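For readers unfamiliar with the two metrics, the following is a minimal sketch of how mAP and Rank-1 can be computed from a query-by-gallery distance matrix. It is a simplified illustration rather than the evaluation code of this project: the function name `evaluate` and the toy data are assumptions, and the same-camera filtering that standard ReID protocols apply to the gallery is omitted.

```python
import numpy as np

def evaluate(dist, query_ids, gallery_ids):
    """Return (mAP, Rank-1) for a (num_query, num_gallery) distance matrix.

    Simplified: no camera-ID filtering. Hypothetical helper for illustration.
    """
    dist = np.asarray(dist, dtype=float)
    query_ids = np.asarray(query_ids)
    gallery_ids = np.asarray(gallery_ids)
    aps, rank1_hits = [], []
    for q in range(dist.shape[0]):
        order = np.argsort(dist[q], kind="stable")     # nearest gallery item first
        matches = gallery_ids[order] == query_ids[q]
        if not matches.any():
            continue                                   # query identity absent from gallery
        rank1_hits.append(float(matches[0]))           # is the top-ranked item correct?
        hit_positions = np.flatnonzero(matches)        # 0-based ranks of correct items
        precisions = np.arange(1, len(hit_positions) + 1) / (hit_positions + 1)
        aps.append(precisions.mean())                  # average precision for this query
    return float(np.mean(aps)), float(np.mean(rank1_hits))

# Toy example: two queries against a gallery of four images.
gallery_ids = [1, 2, 1, 3]
query_ids = [1, 2]
dist = [[0.1, 0.5, 0.9, 0.3],
        [0.2, 0.4, 0.6, 0.8]]
mAP, rank1 = evaluate(dist, query_ids, gallery_ids)
print(f"mAP={mAP:.3f}, Rank-1={rank1:.3f}")  # mAP=0.625, Rank-1=0.500
```

In the toy example the first query retrieves its two correct matches at ranks 1 and 4 (average precision 0.75), while the second finds its only match at rank 2 (average precision 0.5), which gives the printed values.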

The code is available at GCReID.

Citation: Anyone who uses this code is considered by default to have agreed to cite the following reference:

@article{liu2024gcreid,
  title={GCReID: Generalized continual person re-identification via meta learning and knowledge accumulation},
  author={Liu, Zhaoshuo and Feng, Chaolu and Yu, Kun and Hu, Jun and Yang, Jinzhu},
  journal={Neural Networks},
  volume={179},
  pages={106561},
  year={2024},
  publisher={Elsevier}
}
